Building a Culture of Optimization, Part 3: Know the Math

In part 3 of this 5-part blog series about ‘Building a Culture of Optimization’, I’m going to talk about the importance of bringing your organization up to speed on the math behind the tests. You can look back at part 1 on the basics and part 2 on good test design.

Part 3: Know the Math! Give your peers a short stats lesson (but keep it light)!

What does someone running an A/B or MVT test need to know about math? How much detail do they need? Here is what I tell all of my coworkers:

Statistical Confidence = confidence in a repeated result

The confidence level, or statistical significance, indicates how likely it is that a test experience’s success was not due to chance. A higher confidence indicates that:

– the experience is performing significantly differently from the control

– the experience performance is not just due to noise

– if you ran this test again, it is likely you would see similar results
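For the curious, here is a rough sketch of the kind of calculation a confidence calculator might run. It assumes a two-proportion z-test with a normal approximation, which is one common choice and not necessarily what your testing tool uses. The function name `confidence_level` and all visitor and conversion counts are made up for illustration.

```python
from math import sqrt, erf

def confidence_level(control_visitors, control_conversions,
                     variant_visitors, variant_conversions):
    """Two-sided confidence (1 - p-value) that the variant differs from control.

    Assumption: two-proportion z-test with a normal approximation.
    """
    p_c = control_conversions / control_visitors
    p_v = variant_conversions / variant_visitors
    # Pooled rate, i.e. the shared conversion rate if there were no real difference.
    pooled = (control_conversions + variant_conversions) / (control_visitors + variant_visitors)
    se = sqrt(pooled * (1 - pooled) * (1 / control_visitors + 1 / variant_visitors))
    z = (p_v - p_c) / se
    # Standard normal CDF written with the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return 1 - p_value

# Hypothetical data: the same 10% relative lift (10% vs. 11% conversion rate)
# measured on a small and a large sample.
small = confidence_level(1_000, 100, 1_000, 110)
large = confidence_level(10_000, 1_000, 10_000, 1_100)
print(f"1,000 visitors each:  {small:.1%} confidence")
print(f"10,000 visitors each: {large:.1%} confidence")
```

With these made-up numbers, the identical lift falls well short of 95% confidence on the small sample and clears 95% on the large one, which is the "noise" point in the list above.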

Confidence interval = a range within which the true value can be found at a given confidence level

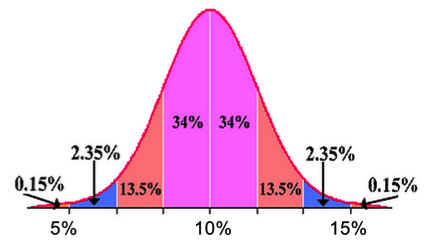

Example: An experience’s conversion rate lift is 10%, its confidence level is 95%, and its confidence interval is 5% to 15%. If you ran this test multiple times, about 95% of the time the lift would fall between 5% and 15%.

What impacts confidence interval?

– Sample size – as a sample grows the interval will shrink or narrow

– Standard deviation or consistency – similar performance over time reduces standard deviation, which also narrows the interval
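The sample size effect can be sketched in a few lines. This assumes a normal-approximation interval for the difference between two conversion rates, with equal traffic to each experience. The function name `difference_interval` and the 10% and 11% rates are hypothetical.

```python
from math import sqrt

Z_95 = 1.96  # z-score for a two-sided 95% confidence level

def difference_interval(control_rate, variant_rate, visitors_per_experience, z=Z_95):
    """Approximate confidence interval for (variant rate - control rate).

    Assumption: normal approximation, same number of visitors in each experience.
    """
    se = sqrt(control_rate * (1 - control_rate) / visitors_per_experience
              + variant_rate * (1 - variant_rate) / visitors_per_experience)
    diff = variant_rate - control_rate
    return diff - z * se, diff + z * se

# Same observed rates, growing sample: watch the interval narrow.
for n in (1_000, 10_000, 100_000):
    low, high = difference_interval(0.10, 0.11, n)
    print(f"{n:>7} visitors: {low:+.4f} to {high:+.4f} (width {high - low:.4f})")
```

With these made-up rates, the interval at 1,000 visitors per experience still includes zero, while at 100,000 visitors it is ten times narrower and sits entirely above zero.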

In the diagram below: while the range may seem wide, most results (about 68%, those within one standard deviation) will cluster around the mean of 10%.
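Where does the 68% come from? Here is a small sketch, assuming results follow a normal (bell curve) distribution and reusing the example above of a 10% lift with a 95% interval of 5% to 15%. The helper `share_within` is a hypothetical name.

```python
from math import erf, sqrt

def share_within(k):
    """Fraction of a normal distribution within k standard deviations of the mean."""
    return erf(k / sqrt(2))

# Example from above: 10% lift, 95% interval of 5% to 15% (half-width of 5 points).
mean_lift = 10.0
half_width_95 = 5.0
# A 95% interval spans about 1.96 standard deviations on each side of the mean.
standard_error = half_width_95 / 1.96

print(f"within 1 standard deviation:     {share_within(1):.1%}")
print(f"within 1.96 standard deviations: {share_within(1.96):.1%}")
print(f"68% band: {mean_lift - standard_error:.1f}% to {mean_lift + standard_error:.1f}%")
```

Under that assumption, about 68% of repeated results would land between roughly 7.4% and 12.6% lift, a much tighter band than the full 5% to 15% range.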

That’s it. That’s all people need to know.

In reality, though, it’s not enough, because it doesn’t go into the details of how to calculate statistical confidence. But there are plenty of online tools to help with that. In my organization I give people a spreadsheet with a confidence calculator built in; all they have to do is plug in their numbers.

The real takeaway here, however, is that statistical confidence is important. I don’t actually care if my marketers know how to calculate it or even care to. All I really need them to know is that there is something mathematical that makes a difference for tests and that they should ask about it. In fact, after I gave a recent presentation on this topic, one of my coworkers told me that by tomorrow he’d forget what this stuff means, but he’d remember that he should ask me about it. Mission accomplished!

Part 4 of this 5-part blog series on ‘Building a Culture of Optimization’ will focus on evangelizing your program, so stay tuned!

Happy testing!

Brian Lang

Hi Krista!

Thanks for the post!

While I don’t expect most marketers to understand the underlying math, I do think they need to be aware of when and why a significance calculator could mislead them (read: the calculator says you’ve reached significance when in fact you haven’t).

Statistical significance (through the p-value) only gives the probability of observing results as extreme or more extreme if there truly were no difference. You also need to account for statistical power, the ability to detect a difference should it truly exist. The majority of A/B test tools and statistical significance calculators assume that you have fixed, in advance, the point at which you will run your significance test (based on minimal detectable effect, power, etc.). From what I’ve seen, most marketers don’t do this, and instead repeatedly check for significance until it is reached. This is problematic, because if you keep checking and are willing to collect enough data, you will ALWAYS eventually “reach significance” at some check, even when there truly is no effect.
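To make that concrete, here is a minimal simulation sketch of an A/A test, where both experiences share the same true conversion rate, so every "significant" result is a false positive. It assumes a two-proportion z-test at 95% and ten evenly spaced checks. The function names, batch size, rate, and seed are all hypothetical choices for illustration.

```python
import random
from math import sqrt

Z_95 = 1.96  # two-sided 95% threshold

def is_significant(conv_a, conv_b, n):
    """Two-proportion z-test with n visitors in each arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return False
    return abs(conv_a - conv_b) / n / se > Z_95

def simulate(experiments=400, checks=10, batch=200, rate=0.10, seed=1):
    """Return (false positive rate when peeking, rate when testing once at the end)."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(experiments):
        conv_a = conv_b = 0
        ever_significant = False
        for check in range(1, checks + 1):
            # Both arms convert at the same true rate: there is no real effect.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            significant_now = is_significant(conv_a, conv_b, check * batch)
            ever_significant = ever_significant or significant_now
        peeking_hits += ever_significant   # would have stopped at some peek
        fixed_hits += significant_now      # result of the single, final test
    return peeking_hits / experiments, fixed_hits / experiments

peek_rate, fixed_rate = simulate()
print(f"stop at first significant peek: {peek_rate:.1%} false positives")
print(f"single test at the planned end: {fixed_rate:.1%} false positives")
```

The single planned test should land near the nominal 5%, while stopping at the first significant peek comes out well above it, and the gap grows as you add more checks.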

Thanks again for the post!

Brian

vishwanath sreeraman

Hi Krista,

What’s the min. confidence level that you set for your tests? Is it recommended to change this based on the test or sample size? Are there any guidelines? We usually go with 90% for all of our tests.

Thanks.

vishwanath sreeraman

bloggerchica

I usually recommend 95%, but it does depend on the test, the organization, etc. You should figure out what your own organization is comfortable with in terms of the risk versus reward of a lower confidence level.